Reinforcement Learning for Schedule Optimization

A hybrid AI model that combines reinforcement learning and graph embeddings to optimize construction planning and scheduling under real-world uncertainty.

Technical Overview

The Problem

Construction planning is constrained by limited budgets, fluctuating resources, and unpredictable environments. Traditional tools often struggle to provide flexible, intelligent decision support under these dynamic conditions, especially for activity sequencing and resource allocation.

The Innovation

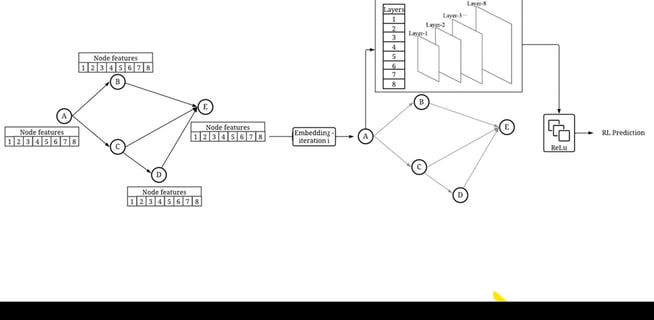

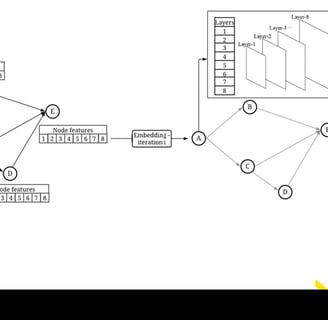

This project introduces a novel hybrid model that combines reinforcement learning and graph embedding networks to support complex construction planning decisions. By simulating real-world planning scenarios using agent-based modeling, the system captures the evolving nature of construction projects and delivers optimized scheduling solutions.
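To make the graph embedding idea concrete, here is a minimal sketch of neighbor-averaging message passing over a precedence graph. It is not the project's actual network: the activity names, durations, the `embed` function, and the fixed 0.5/0.5 mixing weights are all assumptions chosen for illustration, and nothing here is learned.

```python
# Hypothetical toy work breakdown structure: activity -> list of predecessors.
PREDECESSORS = {
    "excavate": [],
    "foundation": ["excavate"],
    "framing": ["foundation"],
    "plumbing": ["framing"],
    "electrical": ["framing"],
    "finishes": ["plumbing", "electrical"],
}
# Hypothetical durations in days.
DURATION = {"excavate": 3, "foundation": 5, "framing": 8,
            "plumbing": 4, "electrical": 3, "finishes": 6}


def successors_of(predecessors):
    """Invert the predecessor map into a successor map."""
    succ = {a: [] for a in predecessors}
    for act, preds in predecessors.items():
        for p in preds:
            succ[p].append(act)
    return succ


def embed(predecessors, duration, rounds=2):
    """Return a 3-number feature vector per activity.

    Each round mixes an activity's own features with the mean of its
    neighbors' features, so information spreads along dependencies.
    """
    succ = successors_of(predecessors)
    max_d = max(duration.values())
    # Initial features: normalized duration, in-degree, out-degree.
    h = {a: [duration[a] / max_d,
             float(len(predecessors[a])),
             float(len(succ[a]))]
         for a in predecessors}
    for _ in range(rounds):
        new_h = {}
        for a in predecessors:
            neighbors = predecessors[a] + succ[a]
            if neighbors:
                agg = [sum(h[n][i] for n in neighbors) / len(neighbors)
                       for i in range(3)]
            else:
                agg = [0.0, 0.0, 0.0]
            # Fixed mixing weights (an assumption); a real network learns these.
            new_h[a] = [0.5 * h[a][i] + 0.5 * agg[i] for i in range(3)]
        h = new_h
    return h


EMBEDDINGS = embed(PREDECESSORS, DURATION)
```

After two rounds, each activity's vector reflects not only its own duration and degree but also those of activities up to two dependency hops away, which is the kind of structural context a scheduling policy can condition on.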

Key Benefits

Offers dynamic, data-driven support for sequencing construction tasks

Efficiently models real-world constraints, such as labor and material availability

Adapts to uncertainty, improving timeline accuracy and risk management

Empowers planners with a decision-making tool grounded in AI

Demonstrates effectiveness in real-world case studies

How It Works

The model uses reinforcement learning to learn scheduling policies over time, while graph embedding captures structural dependencies in work breakdown structures. Together, they allow the system to simulate realistic project scenarios and adaptively recommend scheduling decisions, even under uncertain or shifting conditions.
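The reinforcement learning half can be illustrated with a small, self-contained sketch. It uses tabular Q-learning as a stand-in for the project's actual learner, on a hypothetical four-activity project with one crew resource and random duration overruns. The activity data, `CREW_CAPACITY`, `OVERRUN_PROB`, and all function names are assumptions, and the lookup table replaces whatever function approximator the real system uses. The state is simply the sequence of activities chosen so far; the only reward is the negative makespan at the end of an episode.

```python
import random

# Hypothetical toy project: activity -> (base duration, crew demand, predecessors).
ACTIVITIES = {
    "A": (2, 1, []),
    "B": (2, 1, []),
    "C": (2, 1, []),
    "D": (6, 1, ["A"]),
}
CREW_CAPACITY = 2   # assumed single renewable resource
OVERRUN_PROB = 0.2  # assumed chance that an activity takes one extra period


def eligible(done):
    """Unscheduled activities whose predecessors are all scheduled."""
    return [a for a, (_, _, preds) in ACTIVITIES.items()
            if a not in done and all(p in done for p in preds)]


def makespan(order, rng):
    """Place activities in the given order at the earliest feasible time."""
    usage = {}   # time period -> crew in use
    finish = {}  # activity -> finish time
    for act in order:
        base, demand, preds = ACTIVITIES[act]
        dur = base + (1 if rng.random() < OVERRUN_PROB else 0)
        start = max((finish[p] for p in preds), default=0)
        # Shift right until the crew limit holds for the whole duration.
        while any(usage.get(t, 0) + demand > CREW_CAPACITY
                  for t in range(start, start + dur)):
            start += 1
        for t in range(start, start + dur):
            usage[t] = usage.get(t, 0) + demand
        finish[act] = start + dur
    return max(finish.values())


def train(episodes=3000, alpha=0.1, epsilon=0.2, seed=0):
    """Undiscounted tabular Q-learning over activity orderings."""
    rng = random.Random(seed)
    q = {}  # (state, action) -> estimated return
    for _ in range(episodes):
        state = ()
        while len(state) < len(ACTIVITIES):
            actions = eligible(state)
            if rng.random() < epsilon:
                action = rng.choice(actions)  # explore
            else:
                action = max(actions, key=lambda a: q.get((state, a), 0.0))
            next_state = state + (action,)
            if len(next_state) == len(ACTIVITIES):
                target = -makespan(next_state, rng)  # terminal reward
            else:
                target = max(q.get((next_state, a), 0.0)
                             for a in eligible(next_state))
            old = q.get((state, action), 0.0)
            q[(state, action)] = old + alpha * (target - old)
            state = next_state
    return q


def greedy_order(q):
    """Read the learned policy back out as a single activity order."""
    state = ()
    while len(state) < len(ACTIVITIES):
        best = max(eligible(state), key=lambda a: q.get((state, a), 0.0))
        state = state + (best,)
    return state


if __name__ == "__main__":
    print(greedy_order(train()))
```

In this toy setup, order matters because activity `D` is long and depends on `A`: scheduling `A` late pushes `D` back and lengthens the project. A table keyed on the full sequence does not scale beyond a handful of activities, which is one reason to pair the learner with a compact graph-based representation of the project.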

My Role

I developed and integrated the hybrid model, designed the agent-based simulation environment, and validated the system using real-world construction data. My focus was on achieving both high decision quality and computational efficiency, ensuring practical applicability for industry professionals.